Micrograd CUDA

When I started out in engineering some years ago, coming from the French school system, I tended to take a lot of established things for granted. I think it’s pretty common for Europeans, especially after visiting SF, to have an epiphany when they realize that agency is a thing and that the stuff we use doesn’t just spawn out of thin air.

In that same vein, I recently felt that I was overly reliant on clean abstractions in my ML work. In a way, it’s pretty incredible to be able to summon models by thinking purely in terms of context, attention heads, and utility functions. But as Greg puts it:

a great way to increase your impact as a machine learning engineer is to learn the whole stack; that way you can make any specific change or debug any part of the system without blocking on time from others

— Greg Brockman (@gdb) March 21, 2024

One pretty wide gap in my understanding was how GPUs really worked. It was one hell of a big scary spider of a gap. But this year Denis taught me that fear is the mind-killer. And truly, GPUs turned out to be massively easier to understand than I thought. In retrospect, I’m not even sure what I was scared of.

If you’re reading this and can relate, I highly recommend the book Programming Massively Parallel Processors; it’s a great place to start. As I was going through the book, I remembered Andrej Karpathy’s micrograd, which is likewise a quick and delightful way to anchor some autodiff concepts.

Here’s micrograd-cuda, my own CUDA-seasoned flavor of micrograd. I coded it up last weekend to put the book’s concepts into practice. It’s a bit longer than the original repo, but still surprisingly easy to read for something I used to think of as complex.

Here’s the recipe:

- Massage micrograd’s Value until you get a Tensor, and change all the corresponding scalar algebra to tensor algebra.

- Incorporate batching of inputs to fully leverage matmul acceleration.

- Make sure not to undercook your gradients: let them flow.

- Mix in some CPU/GPU transfer, pointer handling logic, and tensor shape passing.

- Let the Python rest and cook up your CUDA kernels.

- Add in the Python bindings to your kernels.

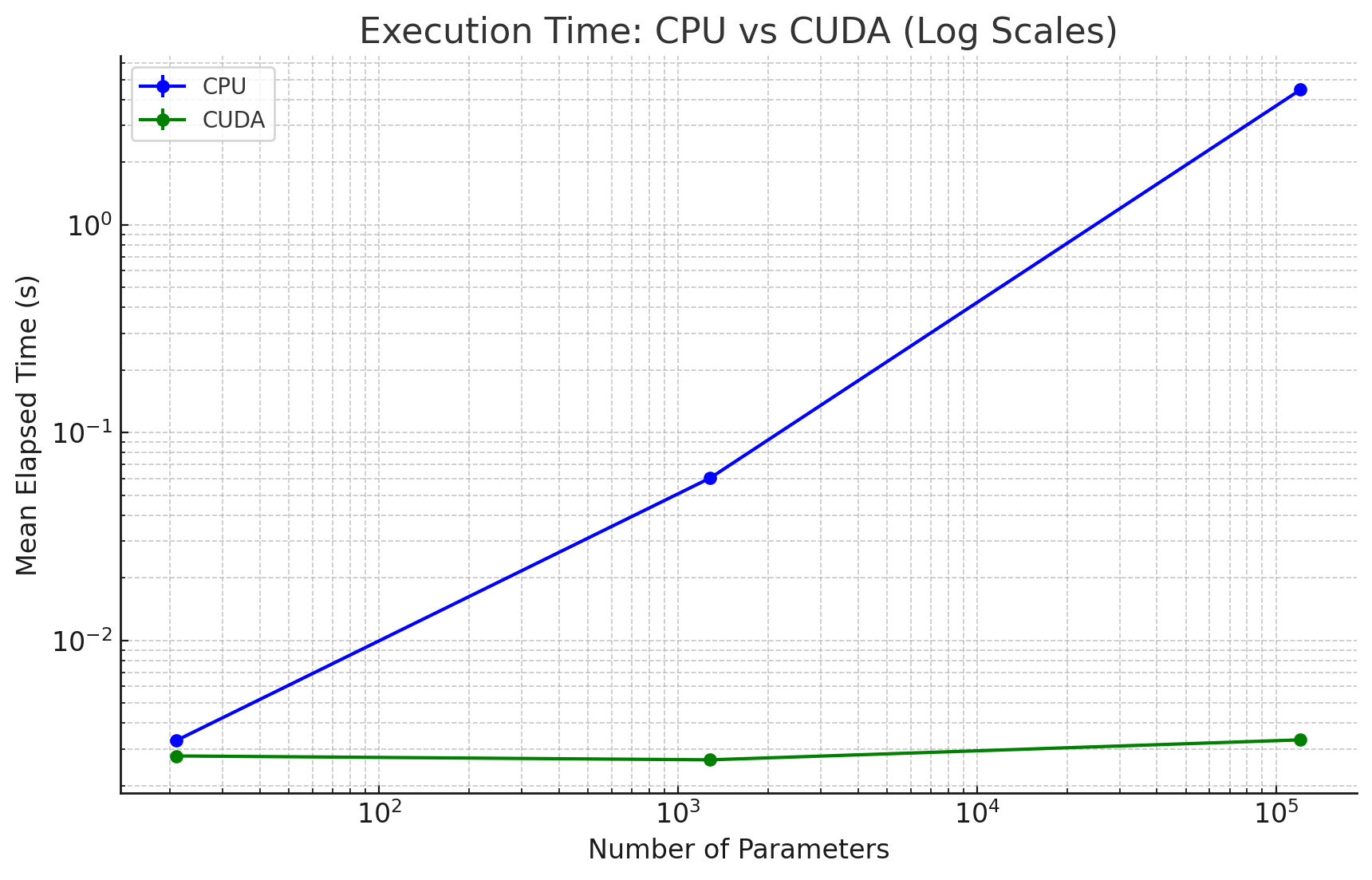

- Enjoy:
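To make the first three steps of the recipe concrete, here is a minimal pure-Python sketch of what turning micrograd’s scalar Value into a Tensor might look like. It is not the code from micrograd-cuda: the Tensor class, its relu/sum methods, and the underscore helpers are hypothetical names, and plain Python lists stand in for GPU buffers, so the CPU/GPU transfer, kernel, and binding steps are left out. It shows a batched matmul plus the two gradient rules for out = A @ B (dA = dOut @ Bᵀ, dB = Aᵀ @ dOut) that let gradients flow back through a whole batch at once.

```python
# Hypothetical sketch, not micrograd-cuda's actual code: micrograd's scalar
# Value generalized to a 2D Tensor. Plain Python lists stand in for device
# buffers, so everything runs on the CPU.


def _zeros(rows, cols):
    return [[0.0] * cols for _ in range(rows)]


def _transpose(a):
    return [list(row) for row in zip(*a)]


def _matmul(a, b):
    # (n x k) @ (k x m) -> (n x m). Each output element is independent of
    # the others, which is what makes it easy to map one element per GPU thread.
    n, k, m = len(a), len(b), len(b[0])
    out = _zeros(n, m)
    for i in range(n):
        for j in range(m):
            out[i][j] = sum(a[i][p] * b[p][j] for p in range(k))
    return out


def _accumulate(dst, src):
    # Gradients add up when a tensor feeds several downstream ops.
    for i, row in enumerate(src):
        for j, v in enumerate(row):
            dst[i][j] += v


class Tensor:
    def __init__(self, data, _children=()):
        self.data = [[float(v) for v in row] for row in data]
        self.shape = (len(self.data), len(self.data[0]))
        self.grad = _zeros(*self.shape)
        self._backward = lambda: None
        self._prev = set(_children)

    def __matmul__(self, other):
        out = Tensor(_matmul(self.data, other.data), (self, other))

        def _backward():
            # For out = A @ B: dA = dOut @ B^T and dB = A^T @ dOut.
            _accumulate(self.grad, _matmul(out.grad, _transpose(other.data)))
            _accumulate(other.grad, _matmul(_transpose(self.data), out.grad))

        out._backward = _backward
        return out

    def relu(self):
        out = Tensor([[max(0.0, v) for v in row] for row in self.data], (self,))

        def _backward():
            # The gradient passes through only where the input was positive.
            _accumulate(self.grad, [[g if v > 0 else 0.0 for g, v in zip(grow, vrow)]
                                    for grow, vrow in zip(out.grad, out.data)])

        out._backward = _backward
        return out

    def sum(self):
        # Reduce to a 1x1 tensor, e.g. a scalar loss over the whole batch.
        out = Tensor([[sum(sum(row) for row in self.data)]], (self,))

        def _backward():
            g = out.grad[0][0]
            _accumulate(self.grad, [[g] * self.shape[1] for _ in range(self.shape[0])])

        out._backward = _backward
        return out

    def backward(self):
        # Same topological-sort approach as micrograd's Value.backward.
        topo, visited = [], set()

        def build(t):
            if t not in visited:
                visited.add(t)
                for child in t._prev:
                    build(child)
                topo.append(t)

        build(self)
        self.grad = [[1.0] * self.shape[1] for _ in range(self.shape[0])]
        for t in reversed(topo):
            t._backward()


# A batch of two 2-feature inputs goes through one matmul instead of
# a loop of scalar ops.
x = Tensor([[1, 2], [3, 4]])
w = Tensor([[1, -1], [0, 1]])
loss = (x @ w).relu().sum()
loss.backward()
print(loss.data)  # [[6.0]]
print(w.grad)     # [[4.0, 4.0], [6.0, 6.0]]
print(x.grad)     # [[0.0, 1.0], [0.0, 1.0]]
```

In a CUDA version, a function like `_matmul` is presumably the natural first candidate for a kernel, since each output element can be computed by its own thread, while the graph bookkeeping in `backward` can stay in Python.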

Anyway, take a look at the repo; I hope it helps you learn something and appreciate GPUs even more!

Here’s the Hacker News thread.